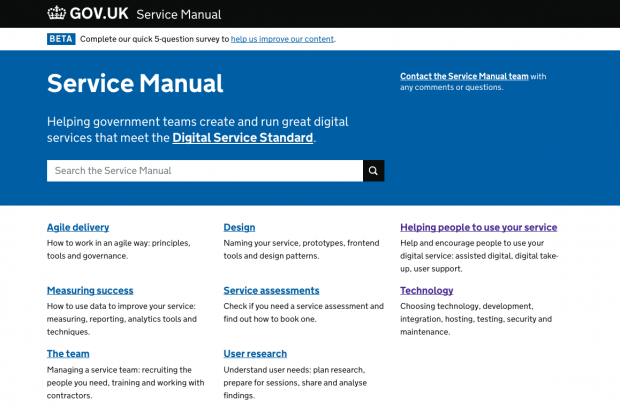

Last year I made a complete copy of the Service Manual for some user research.

One of my teams was planning research to test new Service Manual content with users. We wanted that content to look as realistic as possible, so we wanted it to sit alongside the existing content.

What we wanted was a full ‘copy’ of the Service Manual that we could modify easily. A bit like doing ‘Save page as…’, except for all pages in the Service Manual in one go.

While we could have built the new Service Manual pages in the GOV.UK Prototype Kit, adding all the existing Service Manual content into the prototype would have taken too much time. Linking out to the live content would break the journey: participants wouldn’t be able to dip in and out of our new content.

I’ve described what I did in case anyone has a similar need to copy an existing set of pages for a prototype or user research. These instructions assume you’re using a Mac and have some familiarity with the Terminal. It’s not necessarily the best way of doing it, but it’s quick and it works.

You can see my copy here. Username: wget-blog-post, password: wget. The password is deliberately simple: it’s only there to stop the prototype being found by accident.

How I did it

Use Wget to make a full copy of the Service Manual

There’s a handy command line utility called Wget that you can use to copy web pages. It’s really powerful, which sadly means you need to get your head around lots of different configuration options. On a Mac, you can install it with Homebrew by running brew install wget.

Here’s the command I used:

wget \
  --mirror \
  --recursive \
  --page-requisites \
  --html-extension \
  --convert-links \
  --user=USERNAME \
  --ask-password \
  --https-only \
  --span-hosts \
  --no-cache \
  --no-dns-cache \
  --no-host-directories \
  --accept-regex '^https?://www.gov.uk/service-manual|^https?://assets.publishing.service.gov.uk' \
  https://www.gov.uk/service-manual

Briefly, these options do the following:

| Option | What it does |
| --- | --- |
| --mirror | make a full working copy |
| --recursive | follow links and copy those pages too (--mirror already implies this) |
| --page-requisites | also copy the CSS, JavaScript and other assets each page needs, rather than hotlinking to the hosted versions |
| --html-extension | add .html to saved pages where needed (newer versions of Wget call this --adjust-extension) |
| --convert-links | change links to point to the local files |
| --user / --ask-password | optionally provide a username and prompt for a password, which is useful if you’re copying a password-protected prototype |
| --https-only | only follow HTTPS links |
| --span-hosts | let Wget follow links to other domains, which we need because GOV.UK assets are hosted on a different domain |
| --no-cache / --no-dns-cache | do our best to disable caching |
| --no-host-directories | don’t create a top-level folder named after the website (such as www.gov.uk) |
| --accept-regex | only copy URLs matching this pattern: Service Manual pages and GOV.UK assets. We don’t want all of GOV.UK! |
| https://www.gov.uk/service-manual | the URL we want to copy |
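If you want to check what the --accept-regex pattern lets through before starting a long download, you can try URLs against it locally. This is a minimal sketch, assuming Wget’s default (POSIX) regular expression type matches the same way as extended regular expressions in grep -E. The would_accept helper and the example URLs are hypothetical, made up for illustration.

```shell
# Hypothetical helper: preview which URLs the --accept-regex pattern admits.
# Assumes Wget's default (POSIX) regex type matches like grep -E.
ACCEPT_REGEX='^https?://www.gov.uk/service-manual|^https?://assets.publishing.service.gov.uk'

# would_accept URL: succeeds (exit status 0) if the URL matches the pattern
would_accept() {
  printf '%s\n' "$1" | grep -Eq "$ACCEPT_REGEX"
}

# Illustrative URLs only
for url in \
  https://www.gov.uk/service-manual/agile-delivery \
  https://assets.publishing.service.gov.uk/static/application.css \
  https://www.gov.uk/browse/benefits
do
  if would_accept "$url"; then
    echo "copy: $url"
  else
    echo "skip: $url"
  fi
done
```

The first two URLs are reported as copy and the last as skip. The pattern also matches plain http:// URLs; it’s --https-only that stops Wget following those.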

Security checks

For many sites, you’ll now have a working local copy. If you try copying GOV.UK, though, it’ll look a little broken. That’s because GOV.UK pages include security checks called subresource integrity (SRI) checks, which make sure that assets like CSS and JavaScript haven’t been modified without the site owner’s knowledge. Each asset link carries a hash of the file’s expected contents, and the browser refuses to use the file if what it downloads doesn’t match.
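To see why a modified file fails the check, here’s a minimal sketch of how an integrity value is built: the base64-encoded SHA digest of the file, prefixed with the algorithm name. It assumes the openssl command-line tool is installed (macOS includes one) and defaults to sha384; check which algorithm the integrity attributes you’re dealing with actually use. The sri_hash helper and the CSS snippets are hypothetical.

```shell
# Hypothetical helper: compute an SRI value for a local file.
# sri_hash FILE [ALGORITHM]; ALGORITHM defaults to sha384 (an assumption).
sri_hash() {
  local file=$1 alg=${2:-sha384}
  # Raw binary digest, base64-encoded on one line, prefixed with the algorithm
  printf '%s-%s\n' "$alg" "$(openssl dgst "-$alg" -binary "$file" | openssl base64 -A)"
}

# Rewriting a URL inside a CSS file, as --convert-links does, changes its hash,
# so an integrity attribute recorded for the original file no longer matches.
tmp=$(mktemp)
printf 'body { background: url("https://assets.example/logo.png"); }\n' > "$tmp"
before=$(sri_hash "$tmp")
printf 'body { background: url("../logo.png"); }\n' > "$tmp"
after=$(sri_hash "$tmp")
echo "original:  $before"
echo "rewritten: $after"
rm -f "$tmp"
```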

Most of the assets we’ve copied won’t have changed, but Wget has rewritten the URLs inside some of the CSS files to point to local copies. That changes the files’ contents, so their integrity checks fail and the browser refuses to load them. One fix is to do a ‘find and replace’ across all the HTML files and remove the integrity attributes.

At the same time, we need to remove the crossorigin attribute used on each asset. It tells the browser to fetch the asset using cross-origin resource sharing (CORS), which lets GOV.UK load assets hosted on a different domain. Browsers generally don’t allow these requests for files opened directly from your disk, so we have to remove it.

In Terminal, I ran this command from the same folder where I’d originally run Wget:

find . -name "*.html" -type f -exec sed -E -i '' 's/integrity="sha[^"]*"|crossorigin="anonymous"//g' {} \;

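This command finds every file ending in .html and uses a regular expression to delete the integrity and crossorigin attributes from each one in place. The -i '' syntax is for the version of sed that comes with macOS; GNU sed on Linux expects -i on its own.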

You don’t need this step if the site you’re copying doesn’t use integrity or crossorigin attributes.
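If you’re not on a Mac, or want one command that works with both BSD and GNU sed, a minimal sketch is to write each file through sed to a temporary file instead of relying on -i. The strip_sri helper is a hypothetical name. The [^"]* part stops each match at the closing quote, so any other attributes that follow integrity on the same line are left alone.

```shell
# Hypothetical helper: strip integrity and crossorigin attributes from every
# .html file under a folder. Works with BSD (macOS) and GNU sed because it
# writes to a temporary file rather than using sed's -i option.
# Usage: strip_sri path/to/copy
strip_sri() {
  find "${1:-.}" -name '*.html' -type f | while IFS= read -r f; do
    # [^"]* stops at the closing quote, so later attributes survive
    sed -E 's/integrity="sha[^"]*"|crossorigin="anonymous"//g' "$f" > "$f.tmp" &&
      mv "$f.tmp" "$f"
  done
}
```

Run it as strip_sri . from the folder where you ran Wget, or pass the path to the copy.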

Check it works

You should end up with a folder of HTML files and assets in the directory where you ran Wget. Open service-manual.html in a browser and it should look just like the live Service Manual.
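Rather than clicking through every page, you can get a rough idea of whether the copy worked from the command line. This is a minimal sketch with a hypothetical check_copy helper: it counts the HTML pages, the pages still carrying integrity attributes, and the pages that still link to the live Service Manual, which usually means Wget didn’t download the page being linked to. The patterns assume GOV.UK-style markup.

```shell
# Hypothetical helper: rough sanity checks on the mirrored folder.
# Usage: check_copy path/to/copy
check_copy() {
  local dir=${1:-.} pages sri live
  pages=$(find "$dir" -name '*.html' -type f | wc -l | tr -d ' ')
  # Pages that still carry integrity attributes may fail to load assets
  sri=$(grep -rl --include='*.html' 'integrity="sha' "$dir" | wc -l | tr -d ' ')
  # Links Wget didn't convert still point at the live site, which usually
  # means the linked page wasn't downloaded
  live=$(grep -rl --include='*.html' 'href="https://www.gov.uk/service-manual' "$dir" | wc -l | tr -d ' ')
  echo "HTML pages: $pages"
  echo "Pages with integrity attributes: $sri"
  echo "Pages linking to the live Service Manual: $live"
}
```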

Add your own content

I could now edit the local files to add our new content. Because the copy pulls in the GOV.UK stylesheets, all the existing CSS classes work. You have to write the new content in HTML, though: Markdown won’t work, because there’s nothing to convert it to HTML.

Hosting on Heroku

We wanted our prototype to be accessible outside GDS, so I hosted it on Heroku (these days I’d host it on GOV.UK Platform as a Service instead). Heroku doesn’t natively support static HTML sites, but a small hack gets around that. To get Heroku to deploy a static site, add an index.php file to the root of the folder containing the following:

<?php header( 'Location: /service-manual.html' ) ; ?>

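This tricks Heroku into deploying the folder as a PHP application, which serves the static files as well. The redirect sends visitors who land on the root URL to the copied Service Manual home page.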
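Heroku deploys from a Git repository, so the folder needs committing before you push it. Here’s a minimal sketch that writes the index.php file and makes the first commit. prepare_heroku is a hypothetical helper, the commit author details are placeholders, and it assumes Wget saved the start page as service-manual.html in the folder root.

```shell
# Hypothetical helper: prepare the mirrored folder for a Heroku deploy.
# Assumes the start page was saved as service-manual.html in the folder root.
# Usage: prepare_heroku path/to/copy
prepare_heroku() {
  local dir=${1:-.}
  # The redirect file; its presence makes Heroku treat the folder as a PHP app
  printf '%s\n' "<?php header( 'Location: /service-manual.html' ) ; ?>" > "$dir/index.php"
  # Heroku deploys whatever is committed to Git
  git -C "$dir" init -q
  git -C "$dir" add -A
  # Placeholder author details; use your own
  git -C "$dir" -c user.name="Prototype" -c user.email="prototype@example.com" \
    commit -q -m "Static copy of the Service Manual"
}
```

After that, running the Heroku CLI’s heroku create and then git push heroku HEAD:main should deploy it. Remember to commit any files you add later, such as .htaccess and .htpasswd.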

Finishing touches

I added a .htaccess file to turn on HTTP basic authentication. It contains:

AuthType Basic
AuthName "Restricted Access"
AuthUserFile /app/.htpasswd
Require valid-user

And finally, a .htpasswd file to list the users who should have access:

prototype:[hashed password]

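Each line is a username and a hashed password separated by a colon. You can use an online generator or Apache’s htpasswd tool to hash your passwords; Apache on Linux, which Heroku runs on, won’t accept them in plain text. A robots.txt file isn’t needed, as basic authentication stops search engines crawling the site.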
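If you’d rather not paste your password into a website, you can generate both files from the Terminal. This is a minimal sketch, assuming the openssl command-line tool is available: openssl passwd -apr1 produces the Apache MD5 ($apr1$) hash format that Apache accepts in .htpasswd files. write_basic_auth is a hypothetical helper.

```shell
# Hypothetical helper: write the .htaccess and .htpasswd files for basic auth.
# Usage: write_basic_auth path/to/copy USERNAME PASSWORD
write_basic_auth() {
  local dir=$1 user=$2 password=$3
  printf '%s\n' \
    'AuthType Basic' \
    'AuthName "Restricted Access"' \
    'AuthUserFile /app/.htpasswd' \
    'Require valid-user' > "$dir/.htaccess"
  # openssl passwd -apr1 outputs an Apache MD5 hash ($apr1$...), not the plain password
  printf '%s:%s\n' "$user" "$(openssl passwd -apr1 "$password")" > "$dir/.htpasswd"
}
```

Passing the password as an argument leaves it in your shell history. If that matters, run openssl passwd -apr1 on its own and it will prompt for the password instead.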

All done

I now have my own shiny copy of the Service Manual that I can modify as needed.

Things that won’t work

Wget makes static copies of files, so anything the server generates dynamically can’t be copied. Search won’t work, for example. Nor will a form input that controls which page the user is shown next (as in a transactional service). And because Wget works by following links from the starting page, it won’t find a page that isn’t linked from any other page.

What about preview environments?

At GDS we keep a preview version of GOV.UK running that we call ‘Integration’. It’s used for testing new changes and can be used in a limited way to preview content. It includes all the regular publishing tools that GOV.UK publishers have access to.

Why didn’t we use it?

We tried. The challenge is that Integration is reset (wiped) every night at midnight. We wanted to test across multiple days, so we would have had to recreate our changes each day.

Why not run the real Service Manual app?

The real Service Manual is tightly coupled to the GOV.UK publishing system. Getting it up and running, and learning how to make the changes we needed, would probably have taken a while. Making a hacky copy of just the HTML output was far quicker and easier for our needs.

Final thoughts

Wget is really powerful and useful, but it has a load of options and can seem daunting at first. While playing about, I had to keep tweaking the parameters until I got output that worked correctly. Once you’ve got it set up, though, it works really well, and for some sorts of prototyping it can make a whole range of tasks much easier.

1 comment

Comment by Kevin O'Neill posted on

Wow. A bit daunting - but worth buying a beer for my devs to help me do this for user research. Thanks !