We’re updating our guidance on how to measure user satisfaction and completion rate.

Right now, we measure completion and satisfaction using a Done page, hosted on GOV.UK. We’ve learned a lot through transforming 25 exemplar services and measuring their performance.

In short, Done pages aren’t working – it’s time to update our approach.

Here are some of the problems we’ve identified and how we’re fixing them.

Completion isn’t always from A to B

Services measure completion by counting users starting at the Start page and completing at the Done page.

But there’s usually more than one way to complete a service, something a single Start and Done page doesn’t allow for. For example, you might claim a benefit successfully, or you might be told you are not eligible.

In both cases, the transaction did what it was supposed to do. This is our new definition of a completed transaction.

Failed transactions are now limited to errors in our system and drop-outs, where someone just closes their browser or gives up.
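As a rough illustration of the new definition, here is a minimal sketch of how a service might calculate completion rate from its own outcome data. The outcome labels and the `completion_rate` function are hypothetical names chosen for this example, not part of the guidance; the sketch assumes each started transaction is recorded with exactly one final outcome.

```python
from collections import Counter

# Hypothetical outcome labels (assumptions for illustration only).
# Both a successful claim and a "not eligible" result count as completed.
COMPLETED_OUTCOMES = {"claim_successful", "not_eligible"}
# Failures are limited to system errors and drop-outs.
FAILED_OUTCOMES = {"system_error", "dropped_out"}


def completion_rate(outcomes):
    """Return completed transactions as a fraction of all started transactions."""
    counts = Counter(outcomes)
    unknown = set(counts) - COMPLETED_OUTCOMES - FAILED_OUTCOMES
    if unknown:
        raise ValueError(f"unrecognised outcomes: {sorted(unknown)}")
    started = sum(counts.values())
    if started == 0:
        return 0.0  # no transactions started, so nothing to report
    completed = sum(counts[name] for name in COMPLETED_OUTCOMES)
    return completed / started


# Made-up example data: 10 started transactions.
sample = (
    ["claim_successful"] * 6
    + ["not_eligible"] * 2
    + ["system_error"]
    + ["dropped_out"]
)
print(f"{completion_rate(sample):.0%}")  # prints "80%"
```

In this made-up data, the two "not eligible" outcomes count towards completion, which is the difference from counting only users who reach a single Done page.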

At Service Assessments, we’ll check that all new and redesigned services measure completion rate appropriately.

Read the new guidance on completion rate

We were only asking successful users if they were satisfied

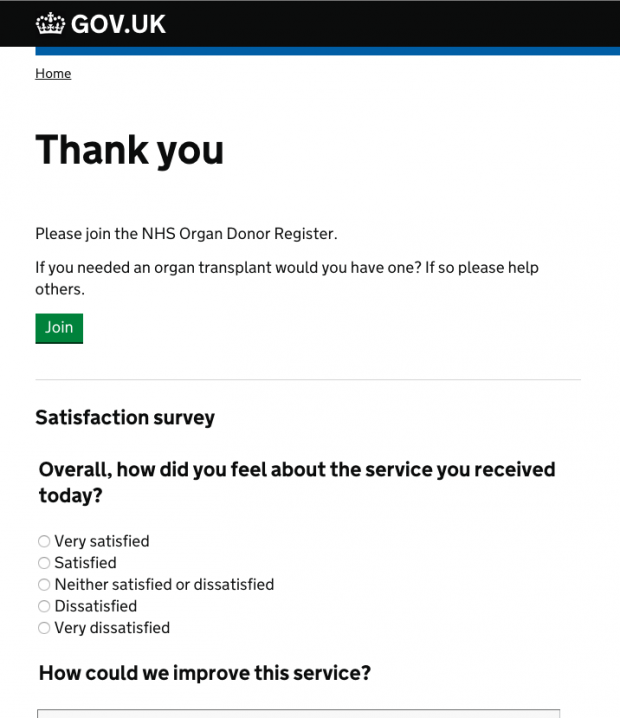

We were asking users to give feedback from the Done page – a page you could only reach if you completed the service successfully.

This was a good way to make our services look really good, but a terrible way to get the information needed to improve them.

We were missing out on the most important feedback – from the users who failed to complete the transaction or otherwise got stuck.

We’ve changed the guidance in the Service Manual. Now we’re asking for feedback in many more places.

We’re prompting users to give feedback at all of the service endpoints – not just where the user was successful. For example:

- Your Carer’s Allowance claim has been successful

- You are not eligible for Carer’s Allowance

- Error – we couldn’t find your National Insurance number

And we’re letting users give feedback from anywhere in the service, through Feedback links in the footer of every page.

At Service Assessments, we’ll check that all new and redesigned services include these Feedback links.

Read the new guidance on user satisfaction

We were using a dark pattern to get users to click through to Done pages

We were recommending services add a ‘Finish’ or ‘Finished’ button to the end of their transaction to get users to click through to their Done page on GOV.UK.

The Finish button is a dark pattern – a pattern designed to trick users into performing an action. Dark patterns achieve business needs, not user needs.

It’s a dark pattern because it looks like you have to click it to finish the transaction. You don’t – you’re already on a transaction end page.

We’re recommending a new pattern that tells users what to expect:

What did you think of this service? (takes 30 seconds)

The link will take you through to a short feedback survey.

Done pages are a dark pattern, too

Done pages are a dark pattern because they mainly achieve government needs, not user needs. The intention of Done pages was to measure completion rate and user satisfaction. But the low click-through rate meant that Done pages weren’t a good way to measure completion rate.

Instead, services are measuring completion using their web analytics. Analytics packages typically come with a ‘goals’ feature for measuring completion.

We’re renaming Done pages to Feedback pages, to make it clear what they do. In the future, we’ll be looking at whether a centrally hosted survey is the best way to measure satisfaction and get feedback.

This is just an iteration

This is the first update to the KPIs in some time, but it’s not the last.

In particular, we’re aware that the way we recommend measuring satisfaction right now isn’t ideal.

In the future, we’re looking at:

- Making a clear distinction between the satisfaction score and user feedback

- Iterating the wording of the satisfaction and feedback questions

- Providing the ability to add custom, service-specific survey questions

- Recommending that services sample users using statistically appropriate methods, rather than prompting everybody

- Using third-party survey tools
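To illustrate the sampling idea in the list above, here is a minimal sketch of one way a service could prompt only a fraction of users. It is not a method from the guidance: hashing a session identifier is just one simple option, and `should_prompt`, `session_id` and `sample_rate` are hypothetical names. Whether a given sampling scheme is statistically appropriate depends on the service and its traffic.

```python
import hashlib


def should_prompt(session_id: str, sample_rate: float) -> bool:
    """Decide whether this session is shown the feedback prompt.

    The decision is deterministic, so the same session always gets the
    same answer, and roughly `sample_rate` of all sessions are prompted.
    """
    if not 0.0 <= sample_rate <= 1.0:
        raise ValueError("sample_rate must be between 0 and 1")
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return bucket < sample_rate


# Example: prompt about 1 in 10 sessions (made-up session identifiers).
prompted = sum(should_prompt(f"session-{n}", 0.1) for n in range(10_000))
print(prompted)
```

A deterministic rule like this avoids prompting the same user on one page and not the next, but it does nothing to correct for which users choose to respond once prompted.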

7 comments

Comment by Tom Wynne-Morgan posted on

It might be worth considering integrating an understanding of Assisted Digital support as part of the feedback. That is, an option to say whether the transaction/service was completed as part of an assisted digital service.

Comment by John Turnbull posted on

In the spirit of good services being verbs, perhaps the 'Feedback' link should be 'Send feedback'?

Comment by Jason Kapranos posted on

When will services have the ability to add custom questions? My service would like to ask users a question similar to the one Tom mentioned above, to help gather some Assisted Digital insight.

Comment by Henry Hadlow posted on

Hi Jason, we can't do this our side because custom questions could include personal information, which we couldn't hold centrally. We'd like to get a service to make their own simple feedback app and open source it, so others can run it and fork it. Would you be interested? Henry – @henryhadlow

Comment by Damian Gilkes posted on

Henry, we on the DWP Secure Comms project want to set up a Feedback link for a service we are developing to allow GPs to submit medical forms electronically. Who do we contact to find out how to set up the Feedback link?

Comment by Damian Gilkes posted on

We on the DWP Secure Comms project are looking to set up a feedback page. Please can someone let us know how we go about it?

Comment by Henry Hadlow posted on

Hi Damian, sorry I missed your previous comment. You can get a Feedback page by following the link 'request a content change' on this page: https://www.gov.uk/service-manual/measurement/user-satisfaction.html